On vibecoding

"[AI usage] is a tool for [whatever]. There's no liking or disliking it."

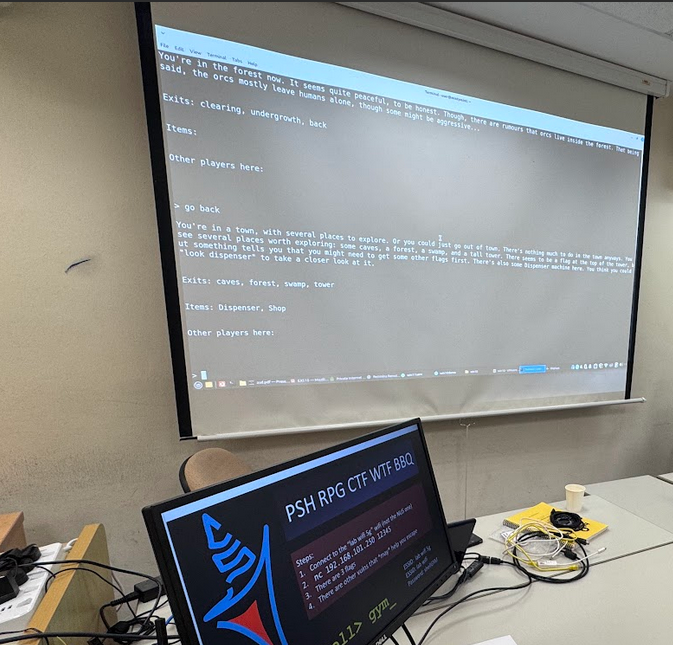

About a month ago, counterShell set up a booth at the NUS Greyhats Summit to showcase a simple CTF challenge crafted as a text-based RPG. Think commands like "go caves" and "get torch". In the backend, though, these were "cd caves" and "move torch.bat %playerinventory%\torch.bat". We had three mini challenges as part of the overall RPG, and a meta challenge to "escape" out of the game.

The challenge was written in PowerShell, and mostly vibecoded. Before you close this tab in disgust, let me share a few observations on vibecoding that will hopefully redeem me.

You're in a town, but actually you're in a PowerShell jail…
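To make the "RPG on top of a shell" idea concrete, here is a minimal, hypothetical sketch of such a translation layer. It is not the actual challenge code, and it is written in Python rather than PowerShell purely for illustration. Only the two mappings ("go caves" to "cd caves", "get torch" to moving torch.bat into %playerinventory%) come from the description above; the `translate` function and the `VERB_TEMPLATES` table are my own assumptions. It returns the shell command as a string instead of executing it.

```python
# Hypothetical sketch (not the real challenge code): map RPG-style verbs
# onto the shell commands they stand for. Only the "go" and "get" mappings
# come from the post; the names and structure here are assumptions.

VERB_TEMPLATES = {
    # "go caves" -> "cd caves": moving between locations is changing directory
    "go": "cd {target}",
    # "get torch" -> move the item's .bat file into the player's inventory folder
    "get": "move {target}.bat {inventory}\\{target}.bat",
}


def translate(command: str, inventory: str = "%playerinventory%") -> str:
    """Return the shell command behind an RPG command, without executing it."""
    verb, _, target = command.strip().partition(" ")
    target = target.strip()
    if verb not in VERB_TEMPLATES or not target:
        raise ValueError(f"unrecognised command: {command!r}")
    return VERB_TEMPLATES[verb].format(target=target, inventory=inventory)


if __name__ == "__main__":
    for cmd in ("go caves", "get torch"):
        print(f"{cmd!r} -> {translate(cmd)}")
```

Unknown verbs are rejected, but note that the target is passed through unchecked: "go .." becomes "cd ..", which climbs out of the current location. In a real jail, gaps like that are presumably what a meta "escape" challenge invites players to find.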

Observations

Firstly, vibecoding, as many people before me have pointed out, is not a substitute for proper software development. That's because software developers (and cybersecurity experts) need to go beyond merely writing code. AI approaches do not always manage to break a complex problem into simpler iterations, much less integrate simple components into a large program. Critical thinking and a proper problem-solving approach are still required to contextualise problems, optimise development, and anticipate new additions to (or refactoring of) the code base. That said, vibecoding highly specific, simple chunks of code freed me to focus on the larger issues at hand, which I found incredibly helpful to my workflow (until the AI introduced a bug).

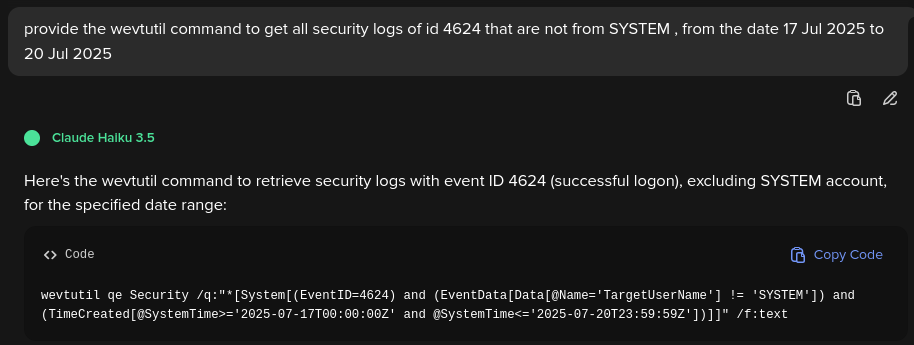

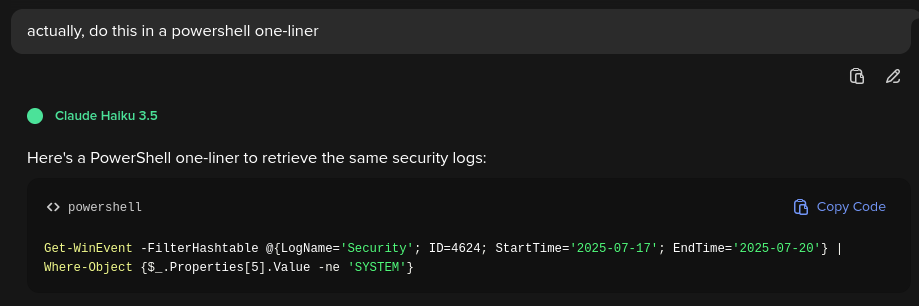

Secondly, vibecoding is no different from searching the Internet for solutions. Some solutions are downright bad and dangerous, and need to be evaluated properly. Your search terms (or prompts) need to be refined. The vibecoding solution I used definitely produced errorneous code, and even worse, vulnerable code. Arguably, the skill of discerning truth from fiction, and even worse, from half-truths, is more relevant than ever before in today's context. My previous experiences with PowerShell helped a lot in spotting and reducing these errors, and in crafting better prompts for the AI to generate proper code that I could use.Who remembers all the wevtutil stuff anyway. But in any case, this is not correct, though, it’s quite close. To be fair, most people won’t use wevtutil. Instead, if you are ok with running PowerShell, prompting for a PowerShell one-liner is a better approach:

This is correct, and I’d just add a “| Format-List” at the end, or some similar way to display output.

Thirdly, I've always seen coding as a creative endeavour, especially if you're developing something of your own from scratch, and I enjoy crafting CTF challenges because I enjoy being creative. The same goes for creating training material and exercises. To me, what differentiates human-produced art in the era of AI-generated art is process and inspiration. These must come through in the human product. There must be a thought process and a message behind the creative endeavour. It cannot be "random BS go". The AI can help with the process and the output, but the human must be its source, going beyond simple prompting and "meh, that looks good enough".

How this impacts the way we craft our courses

To sum up my view on using AI, I'd like to quote a line from a certain anime series: "[AI usage] is a tool for [whatever]. There's no liking or disliking it."

Just like "googling" and Wikipedia, AI (and its abuse) is now readily accessible, and it should be used, albeit with caution.

If you're still reading this, I'd like to relate all of this to counterShell's approach to training. We believe that every learner has the right to use AI tools and search the Internet. This is how the real world works. We want our learners to have the agency to explore and to get excited as they learn new things that spark joy. In assessments, we want learners to demonstrate a deep understanding of and familiarity with their work. This is why simply submitting assignments or finding flags is not enough. We're more interested in the thought process and intuition our learners have than in the actual answers. We want to know how learners use AI, what purposes they use it for, and what they discovered along the way. This also helps us fine-tune our courses.

As we develop the material for the Adversary Simulation, Detection and Countermeasures (ASDC) series of courses, this consideration is always at the forefront. We are all in favour of learners making use of the resources available to them. Rather than restricting these tools, educators and training providers should think about how to assess knowledge, skills and abilities in a real-life environment where AI tools are prevalent, and perhaps even assess learners' knowledge, skills and abilities in employing AI tools in professional work. At counterShell, we take this seriously, and are working on material that reflects our approach to professional development.